
[Fri Jul 17 16:14:53 CEST 2009]

Now, here is an interesting incident that just happened to me at work this morning. My manager called us all to a meeting by sending out an email using Microsoft Outlook. I noticed the email message contained the following information:

When: 17 July 2009 14:30-15:30 (GMT) Greenwich Mean Time : Dublin, Edinburgh, Lisbon, London.

So, here is the issue: none of those cities is on GMT right now, despite what the email states. London, for instance, is on BST (British Summer Time, GMT+1) right now. So, unless one assumes that whatever time it happens to be in London is always GMT (which is obviously incorrect), the information sent out by the Microsoft Exchange Server was wrong. Yet, when I asked for clarification as to the actual time we would convene, I was given the right answer, together with an explanation that Outlook clients don't have that problem because they automatically correct the error. In other words, I am supposed to accept that the problem is not that MS Exchange Server is doing things incorrectly, but rather that the client should share the same bug and understand that the information the server sends is incorrect. Since I don't use Microsoft products, it sounds as if it's my fault, after all. It certainly reminded me of an interview with Samba developer Jeremy Allison I read some time ago, where he explained how, while writing the code for Samba, he also had to reproduce the bugs the protocol had. Otherwise, the whole thing would break. Nice way to do software engineering, huh?
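To make the one-hour discrepancy concrete, here is a small sketch using Python's standard `zoneinfo` module. It assumes Python 3.9 or later and that the IANA time zone database is available (from the operating system or the `tzdata` package). It compares the two possible readings of "14:30 (GMT)" on 17 July 2009: literally 14:30 GMT, or 14:30 London wall-clock time. The helper name `two_readings` is purely illustrative.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

LONDON = ZoneInfo("Europe/London")


def two_readings(year, month, day, hour, minute):
    """Return the meeting start in UTC under both readings of the '(GMT)' label."""
    # Reading 1: the label is taken literally, i.e. the time is GMT (UTC+0).
    literal_gmt = datetime(year, month, day, hour, minute, tzinfo=timezone.utc)
    # Reading 2: the time is London wall-clock time, with whatever offset applies that day.
    london_local = datetime(year, month, day, hour, minute, tzinfo=LONDON)
    return literal_gmt, london_local.astimezone(timezone.utc), london_local.tzname()


gmt, local_as_utc, zone_name = two_readings(2009, 7, 17, 14, 30)
print(zone_name)            # BST
print(gmt - local_as_utc)   # 1:00:00
```

With a January date the two readings coincide, because London observes GMT in winter; in July they are an hour apart.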

[Tue Jul 14 11:00:10 CEST 2009]

I can hardly believe I am going to say this, but I must acknowledge that I am starting to see some usefulness in Twitter. Yes, I know, I know. It does look like an absolute waste of time. Who cares about a bunch of egotistically chatty people continuously publishing messages about every single moronic thing they do: "I am having breakfast now", "Gee! I hate nuts!", "Wouldn't you rather be at the beach somewhere?" and the like? Of course, from that point of view, Twitter would be quite useless (although I must say I've used Facebook in a very similar manner, to report all my daily moves, when my wife had to travel to the US for a quick visit and I stayed back home with the kids: it was just an easy way for her to see what we were up to from a distance). Then you come across news reports like Time's "How Twitter Will Change the Way We Live", and you can hardly be blamed for thinking that it's all overhyped stupidity. So, what made me change my view on this tool? Consider this passage from the Time article I just mentioned:

Earlier this year I attended a daylong conference in Manhattan devoted to education reform. Called Hacking Education, it was a small, private affair: 40-odd educators, entrepreneurs, scholars, philanthropists and venture capitalists, all engaged in a sprawling six-hour conversation about the future of schools. Twenty years ago, the ideas exchanged in that conversation would have been confined to the minds of the participants. Ten years ago, a transcript might have been published weeks or months later on the Web. Five years ago, a handful of participants might have blogged about their experiences after the fact.

But this event was happening in 2009, so trailing behind the real-time, real-world conversation was an equally real-time conversation on Twitter. At the outset of the conference, our hosts announced that anyone who wanted to post live commentary about the event via Twitter should include the word #hackedu in his 140 characters. In the room, a large display screen showed a running feed of tweets. Then we all started talking, and as we did, a shadow conversation unfolded on the screen: summaries of someone's argument, the occasional joke, suggested links for further reading. At one point, a brief argument flared up between two participants in the room, a tense back-and-forth that transpired silently on the screen as the rest of us conversed in friendly tones.

At first, all these tweets came from inside the room and were created exclusively by conference participants tapping away on their laptops or BlackBerrys. But within half an hour or so, word began to seep out into the Twittersphere that an interesting conversation about the future of schools was happening at #hackedu. A few tweets appeared on the screen from strangers announcing that they were following the #hackedu thread. Then others joined the conversation, adding their observations or proposing topics for further exploration. A few experts grumbled publicly about how they hadn't been invited to the conference. Back in the room, we pulled interesting ideas and questions from the screen and integrated them into our face-to-face conversation.

Now, this is a different way to do things! As a matter of fact, it's no different from the way I meet my co-workers in the internal IRC channel to chat about the quarterly All Hands meeting. The top execs give their speech and, in the meantime, regular employees summarize what they say, add links to related documents, comment on what is being said or joke about it. In other words, they create a conversation out of what was originally a simple speech. Every single time I hear of an interesting conference or speech these days, I check whether there is a conversation going on about it on Twitter. Just a few years ago, I simply had no access to whatever was said there unless I was invited. Does any of this matter? It depends on how much you value information, I suppose. Still, I now regularly check Tim O'Reilly's tweets, where I find links to interesting articles almost daily. {link to this story}

[Mon Jul 13 14:01:51 CEST 2009]

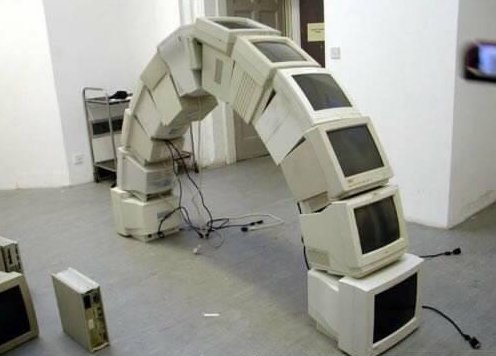

A good friend sent me this picture of what a bored sysadmin can do:

{link to this story}

[Mon Jul 13 13:34:27 CEST 2009]

The cartoon by Erlich published by the Spanish daily El País today is quite funny:

Translation:

— Yes, the rent is a bit higher... but take into account that it's a brand design.

Of course, we all know there are other reasons to run Apple products, especially when compared with Windows. It's not only the design that justifies the higher price, but also the better integration of the whole product. I'm a big Linux user and fan, but there is no doubt in my mind that Mac OS X is a much more polished product than either Linux or Windows. Sure, what you gain in usability you lose in the freedom to purchase products from different vendors, and the price tag is also a bit higher. In the end, that's the customer's choice, and I don't see anything wrong with that. But, to some extent, the message the cartoon sends is not accurate, since it gives the impression that Apple only sells hot air in the form of a cool design, when in reality it sells much more than that. Mind you, just a few years ago I'd have sided with those who think that Apple is full of it and simply charges more because it can. But then we bought a MacBook Pro for my wife, and we loved it so much that we ended up getting a regular MacBook for the kids too. Not having to deal with the constant headaches that either Windows or Linux gives you is well worth the price. Honest.

So, why do I continue running Linux most of the time? For several reasons. It still gives me a power and flexibility that I don't get with a Mac. With Linux, you can mix and match the software. You have lots of choices as far as the window manager or desktop environment is concerned. It's also easier to customize things here and there, since you can usually access the actual configuration files and edit them directly with a simple text editor. Finally, it also makes me feel good for philosophical reasons. What I mean is that there are also good reasons to run Linux. However, that doesn't mean I should poke fun at Apple for charging a few extra bucks for a nicely integrated product. I think it's definitely worth the price. {link to this story}

[Fri Jul 10 15:55:02 CEST 2009]

I must admit I've never been a big fan of Solaris. However, after listening to a recent episode of the FLOSS Weekly podcast on OpenSolaris, I have to say that a few of the technologies contributed by the project sound quite exciting. To the already well-known DTrace tracing tool and the ZFS filesystem (the latter with some very exciting features, such as the ability to take snapshots and create copy-on-write clones), one should now add Crossbow, still under heavy development, which implements network virtualization. All in all, OpenSolaris does look like an exciting technology, at least for the server. The desktop is a completely different story, as became evident during the show. Yes, there are people who run the OS on their laptops, but if you thought Linux's driver problems were bad, wait until you try OpenSolaris. {link to this story}
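To illustrate what "copy-on-write" buys you for snapshots and clones, here is a deliberately simplified toy model in Python. It is not how ZFS is actually implemented (ZFS works with a tree of block pointers inside a storage pool); the names `BlockStore`, `Dataset` and `clone` are hypothetical. The only idea it shows is that writes never overwrite a block in place, so a snapshot is just a saved copy of the block map and a clone starts out sharing all of its data.

```python
class BlockStore:
    """Toy storage pool: physical blocks are appended, never modified in place."""

    def __init__(self):
        self.blocks = []

    def put(self, data: bytes) -> int:
        self.blocks.append(data)
        return len(self.blocks) - 1  # physical block id


class Dataset:
    """Toy writable dataset: maps logical block numbers to physical block ids."""

    def __init__(self, store: BlockStore, block_map=None):
        self.store = store
        self.block_map = dict(block_map or {})

    def write(self, lbn: int, data: bytes) -> None:
        # Copy-on-write: allocate a fresh physical block and repoint the map,
        # leaving the old block untouched for any snapshot that references it.
        self.block_map[lbn] = self.store.put(data)

    def read(self, lbn: int) -> bytes:
        return self.store.blocks[self.block_map[lbn]]

    def snapshot(self) -> dict:
        # Copies only the small block map; no data blocks are duplicated.
        return dict(self.block_map)


def clone(store: BlockStore, snap: dict) -> Dataset:
    # A clone is a new writable dataset that initially shares every block with the snapshot.
    return Dataset(store, snap)


store = BlockStore()
fs = Dataset(store)
fs.write(0, b"hello")
snap = fs.snapshot()        # cheap: no data copied
fs.write(0, b"goodbye")     # new block; the snapshot still points at b"hello"
cl = clone(store, snap)
print(fs.read(0), cl.read(0), len(store.blocks))  # b'goodbye' b'hello' 2
```

A real filesystem would also free blocks once nothing references them; this toy never reclaims space.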

[Thu Jul 9 16:29:08 CEST 2009]

Yesterday's big technology news was, without any doubt, Google's announcement of its newfangled Chrome OS, due sometime in 2010:

Google is developing an open-source operating system targeted at internet-centric computers such as netbooks and will release it later this year, the company said Wednesday.

The OS, which will carry the same "Chrome" name as the company's browser, is expected to begin appearing on netbook computers in the second half of 2010, Google said in a blog post. It is already talking to "multiple" companies about the project, it added.

The Chrome OS will be available for computers based on the x86 architecture, which is used by Intel and Advanced Micro Devices (AMD), and the Arm architecture.

So, why should we care? Is it truly that important that Google is about to release its own operating system? How many other attempts to unseat the Microsoft behemoth have we seen before? Well, this is no standard operating system:

The heart of Chrome OS is the Linux kernel. Applications, which can be written in standard Web programming languages, will run inside Google Chrome in a new windowing system. They will additionally run inside the Chrome browser on Windows, Mac or Linux machines, meaning that a single application could run on almost any computer.

In other words, Google is shooting for a truly universal operating environment (let's use that term to distinguish it from the traditional operating system), something completely independent of the underlying OS. You can run the same applications and access the same data from any system in the world. You can be at home, at work, in Mexico or in Swaziland: it makes no difference. Your applications, your email and your documents follow you wherever you go. This is the old vision of Jamie Zawinski, Marc Andreessen and other Netscape folks. The problem is that, back when they first formulated the idea, it was technically impossible to implement. Not only was the technology not readily available, but people's mentality wasn't there either. Scott McNealy's "the network is the computer" was a cool idea that showed up on the covers of most industry magazines, but end users still hadn't caught up with it. Until quite recently, people felt squeamish about trusting their data and applications to a provider. Not only did they have privacy concerns, but they also didn't like the idea of a network-centric existence. Well, all that has changed in the last few years. Most people I know don't use POP email accounts any more. They prefer webmail, and they have also moved their calendars, to-do lists, home finance management and lots of other things to the web. Sure, ISPs still provide email accounts and homepages, but few people use them. Instead, everyone has moved to webmail, Facebook, MySpace, Remember the Milk, Expensr and the like. Heck, people don't even listen to music from their own disks anymore... unless they do it on their iPods. Instead, services like Spotify have come to replace them. Suddenly, it's all social and on the network. Obviously, the next step is to move your operating environment to the cloud as well. It's all far more in tune with a mobile, permanently in-flux world.

So, what do we think about this trend? In principle, I think it's simply unstoppable. It's just the spirit of the times, and any attempt to go against it will be defeated. However, is it good to have a single vendor (in this case, Google) in control of the new environment? I don't think so. In that sense, I'm sort of torn about the whole thing. On the one hand, I want Google to pull this one off, because I am convinced the new computing model they stand for is the best. On the other hand, I do want to see some alternatives to Google, even if I personally may choose to stay with them as my vendor of choice. Or, to put it another way, I don't want to replace Microsoft and the desktop-centric paradigm with Google and the network-centric paradigm. Choice is good. {link to this story}

[Fri Jul 3 16:18:44 CEST 2009]

A funny story that nobody should miss: Giving up my iPod for a Walkman. What if a 13-year-old were to swap his shiny iPod for an old Walkman for a whole week? {link to this story}