[Sun Sep 27 13:38:37 CEST 2009]

Ars Technica publishes a review of Sidewiki, the latest product announcement by Google. Overall, I must say I have to agree with the criticisms:

> It's unclear if the service will really deliver a lot of value. After surfing around the Internet with it for a short while and looking at the annotations that have already been posted on popular websites, I have yet to see any that are really useful or substantive. It appears as though most users don't even really know what to post yet. The quality of the content could increase as use of the service becomes more widespread.
>
> (...)
>
> This new offering from Google is intriguing in some ways and it shows that the company is thinking creatively about how to build dialog and additional value around existing content. The scope and utility of the service seems a bit narrow. The random nature of the existing annotations suggest that the quality and depth of the user-contributed content will be roughly equivalent with the comments that people post about pages at aggregation sites like Digg and Reddit.
>
> What makes Wikipedia content useful is the ability of editors to delete the crap and restructure the existing material to provide something of value. Without the ability to do that with Sidewiki, it's really little more than a glorified comment system and probably should have been built as such. As it stands, I think that most users will just be confused about what kind of annotations they should post.

To put it simply: while I think Google Sidewiki may be an excellent idea when access to the comments is limited to a group of users, chances are it's a recipe for disaster when opened to everyone and anyone, as it currently is. In other words, I can see how a tool like this could be extremely useful for a group of people who are engaged in collaborative research, for instance. The same applies to a group of friends who have similar interests and rely on each other's commentaries to find out about certain things. But that would require adding some features to the tool as it currently exists, since it is now open to everyone without any sort of editing. As such, it is wide open to abuse by the usual morons.

[Sun Sep 27 13:31:01 CEST 2009]

While reading a discussion on Barrapunto (the Spanish equivalent of Slashdot) about HTML editors for Linux I learn about Aptana Studio. I had never heard of this app before, but it certainly looks interesting. It provides a complete web development environment for HTML, CSS and JavaScript, along with plenty of plugins created by its user community. These plugins provide support for Ruby on Rails, Python, PHP and Adobe AIR, among many other things. It's also possible to run it as a plugin for Eclipse. It may be well worth a try.

[Wed Sep 23 14:21:44 CEST 2009]

I read on the eWeek website that Google has released a new Java-like programming language (Java-like at least in the sense that it runs on the Java Virtual Machine):

> "Noop (pronounced 'no-awp', like the machine instruction) is a new language that attempts to blend the best lessons of languages old and new, while syntactically encouraging industry best-practices and discouraging the worst offenses," according to a description of the language on the Noop language Website.
>
> Noop supports dependency injection in the language, testability and immutability. Other key characteristics of Noop, according to the Noop site, include the following: "Readable code is more important than any syntax feature; Executable documentation that's never out-of-date; and Properties, strong typing, and sensible modern stdlib."

I'm not sure we needed yet another programming language. On the other hand, Ruby is pretty new and has been quite successful. It's just that one cannot help being somewhat skeptical when reading once again the promise of promoting readability over features. Pretty much all languages promised that when they started, but then they grow and have to respond to the demands of the community that uses them. I don't see why Noop should be any different.

[Fri Sep 18 15:05:47 CEST 2009]

Barrapunto, the Spanish equivalent of Slashdot, published a few days ago a story about alternatives to Apple's Time Machine for Linux that helps one realize why Linux still is not a part of the mainstream. The thing is that, whatever one may think of Apple, its Time Machine backup software kicks a**. It's easy to configure and use, and it does its job pretty well. What's the alternative in Linux? As the original poster says, there are several projects (TimeVault, FlyBack, Back In Time...) but there are a few problems with them: first, they are not nearly as rich as Time Machine; second, active development seemed to stop sometime in 2007; and third, let's face it, they're just copycats. The fact is that, for one reason or another, backup on Linux is still limited to command-line tools or customized shell scripts binding together the usual suspects (tar, gzip, rsync...). Hardly the ideal situation for most mainstream users.
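As a rough illustration of what those hand-rolled scripts typically do, here is a minimal Python sketch of Time Machine-style snapshots: each run produces a complete-looking directory tree, but files that did not change since the previous snapshot are hard-linked rather than copied (the same idea behind rsync's `--link-dest` option). The function name `snapshot`, the directory layout and the assumption that snapshot names sort chronologically are mine, for illustration only; none of this is taken from the projects mentioned above.

```python
import filecmp
import os
import shutil


def snapshot(source, dest_root, name):
    """Create dest_root/name as a snapshot of source and return its path.

    Assumption: existing snapshot names sort chronologically (e.g. dates),
    so the last entry in dest_root is the most recent snapshot.
    """
    previous = sorted(os.listdir(dest_root)) if os.path.isdir(dest_root) else []
    prev_dir = os.path.join(dest_root, previous[-1]) if previous else None
    target = os.path.join(dest_root, name)

    for dirpath, _dirs, files in os.walk(source):
        rel = os.path.relpath(dirpath, source)
        target_dir = os.path.normpath(os.path.join(target, rel))
        os.makedirs(target_dir, exist_ok=True)
        for fname in files:
            src = os.path.join(dirpath, fname)
            dst = os.path.join(target_dir, fname)
            old = os.path.join(prev_dir, rel, fname) if prev_dir else None
            if old and os.path.isfile(old) and filecmp.cmp(src, old, shallow=False):
                # Unchanged since the last snapshot: share the data on disk.
                os.link(old, dst)
            else:
                # New or modified file: store a fresh copy.
                shutil.copy2(src, dst)
    return target
```

Calling `snapshot("/home/me", "/mnt/backup", "2009-09-18")` once a day (the paths are placeholders) would give one browsable folder per day while only spending disk space on what actually changed. It needs a filesystem that supports hard links, and it ignores symlinks, permissions and deletions of old snapshots, which is exactly the kind of polish the dedicated tools are supposed to provide.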

[Fri Sep 18 15:00:08 CEST 2009]

Check out this cool optical illusion grabbed from the Seed Magazine website. Just stare at the red dot for a while.

[Wed Sep 16 11:59:17 CEST 2009]

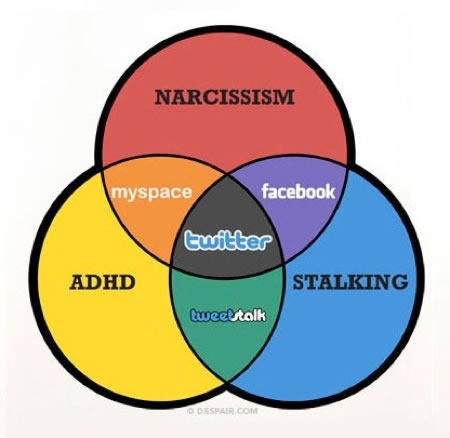

And here is yet another original Venn diagram, in this case applied to social networks:

This other one came from the Global Nerdy website. {link to this story}

[Wed Sep 16 10:56:17 CEST 2009]

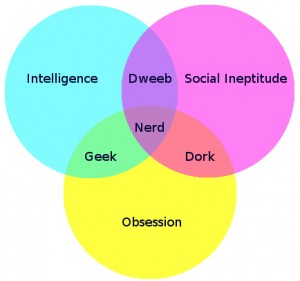

Haha! I've been waiting for a long time for a graphical (i.e., easy to understand) explanation of the difference between a geek and a nerd, which I have to explain to people every now and then. Well, here it is! Mike Brotherton has published the Nerd Venn Diagram:

{link to this story}

[Wed Sep 16 10:46:36 CEST 2009]

How often do you search the Web to find the answer to a particular question? Plenty of times, right? Me too. From who directed that movie to how to clean certain stains from my clothes. Most of the time, the search engine returns information from Yahoo Answers or AnswerBag close to the top. Well, Dave Pogue tells us about Aardvark, supposedly a "new, better way to harness the Net for answers". I must say the idea sounds pretty good, but I haven't tried it yet.

[Fri Sep 11 19:48:21 CEST 2009]

While listening to the SFF audio podcast, one of the hosts explains that when he needs to calculate a ratio he simply uses Photoshop or the GIMP. Hmmm, that's an idea.

[Fri Sep 11 15:14:17 CEST 2009]

What the...? According to this entry on Internet News, Microsoft is getting ready to launch a new open source foundation. A spinoff of their CodePlex website, the new foundation will include Miguel de Icaza on its Board of Directors.

> A Microsoft FAQ on the new foundation notes that Codeplex was started in 2006 as a project hosting site that met the needs of commercial developers. The Foundation is related but is a separate effort.
>
> "The Foundation is solving similar challenges; ultimately aiming to bring open source and commercial software developers together in a place where they can collaborate," the foundation FAQ states. "This is absolutely independent from the project hosting site, but it is essentially trying to support the same mission. It is just solving a different part of the challenge, a part that Codeplex.com isn't designed to solve."
>
> From a larger point of view, Microsoft's new open source foundation is taking aim at a real problem. That is the problem of participation. Sure there are lots of organizations that contribute to open source today, but there is still room for more.
>
> "We believe that commercial software companies and the developers that work for them under-participate in open source projects," Microsoft stated.

Please, pinch me. I'm not dreaming, right? Is this the same Microsoft that used to disparage the open source movement as a bunch of Commies? Anyway, I have to agree with the author of the article for Internet News. There are already plenty of open source foundations out there. Do we truly need one more? Or is it that Microsoft wants one that it can control?

[Fri Sep 11 11:59:23 CEST 2009]

I must say I had no clue the Apache Software Foundation (ASF) was involved in so many different projects, other than the well-known Apache HTTP web server software. However, while checking out this presentation from eWeek on 11 Apache technologies that have changed computing in the last 10 years I find out about projects such as Cocoon, Apache Directory Server, Apache Geronimo, CouchDB, Harmony, Hadoop or Lucene. Likewise, I truly didn't know that SpamAssassin was actually one of their projects, although I did know that Ant was. It seems as if the Apache Incubator has been working at full steam for quite some time now.

[Thu Sep 10 15:23:43 CEST 2009]

A friend passed me a link to an article published by The Register about Microsoft selling some Silicon Graphics patents. Apparently, they needed to cash in on some of their intellectual property and decided to sell these to the Open Invention Network (OIN) after approaching other organizations that showed no interest in the deal. Now, all this is pretty normal, I think. What I found peculiar is the concept of non-practicing entities discussed in the article:

> Microsoft is understood to have approached different organizations in an attempt to sell the 22 [patents], including companies euphemistically known as non-practicing entities, or patent trolls. Such companies typically hold patents with a view to making money through enforcement.

Ain't that great? A non-practicing entity. In other words, an entity (a business) that does absolutely nothing to benefit society at large. It just figures out a way to squeeze money by applying what could be termed legal extortion. I wonder how many of these "firms" just pocket the money without even going to court, just from threatening to do so and forcing the other party to settle. Not so different from the way the Mafia acts, huh?

[Thu Sep 10 15:10:17 CEST 2009]

> Americans are notorious multitaskers in the workplace, but the latest report out of Nielsen shows that we're increasingly multitasking when it comes to media consumption as well. The amount of time spent watching TV (live or time-shifted), watching video on the Internet, using a mobile phone, and even watching video on a mobile phone has all increased. And, because days are (unfortunately) not elastic, the firm attributes this increase across all mediums to people doing more and more at the same time.
>
> (...)
>
> Where are we getting all of that time? We are apparently overlapping it. A full 57 percent of US Internet users reported browsing the Internet and watching the TV simultaneously, an activity that was largely unheard of in your average home even five years ago, but is relatively common these days. On average, these people spent about 2 hours and 39 minutes per month doing these activities together, with almost a third of their Internet time being spent in front of the TV. "This simultaneous activity is one reason we see continued growth of both Internet and TV consumption," wrote Nielsen.

Now, you could see this one coming. All you had to do was check out your Facebook or Twitter account to see all those friends commenting in the status box on whatever they happen to be watching on TV at that very moment. But what changes can we expect as a consequence of this multitasking trend in media consumption? I'd imagine TV shows will become more and more superficial, since multitasking won't allow viewers to invest much time in character recognition and build-up. So, expect more action (à la Hollywood movies) and characters whose personalities are even less defined. On the other hand, it will also be interesting to see how the scripts may adapt to the viewers' comments, and perhaps the depth that will be missing from the show itself will move to the online forums, where people can discuss what is going on on the screen and talk about it from different viewpoints. Just think of the possibilities this could have for a documentary, for example. And how about the news?

[Tue Sep 8 11:37:19 CEST 2009]

New Scientist has published an interesting article on evolution applied to the world of technology written by W. Brian Arthur, author of The Nature of Technology:

> Barely four years after the publication of Darwin's On the Origin of Species, the Victorian novelist Samuel Butler was calling for a theory of evolution for machines. Since then, a few hardy souls have attempted to oblige him, but none has quite hit the mark. Their reasoning, very much à la Darwin, is that any given technology has many designers with different ideas, which produces many variations. Of these variations, some are selected for their superior performance and pass on their small differences to future designs. The steady accumulation of such differences gives rise to novel technologies and the result is evolution.
>
> This sounds plausible, and it works for already existing technologies; certainly the helicopter and the cellphone progress by variation and selection of better designs. But it doesn't explain the origin of radically novel technologies, the equivalent of novel species in biology. The jet engine, for example, does not arise from the steady accumulation of changes in the piston engine, nor does the computer emerge from accumulated changes in electromechanical calculators. Darwin's mechanism does not apply to technology.
>
> (...)
>
> To start with, we can observe that all technologies have a purpose; all solve some problem. They can only do this by making use of what already exists in the world. That is, they put together existing operations, means, and methods (in other words, existing technologies) to do the job.
>
> (...)
>
> So novel technologies are constructed from combinations of existing technologies. While this moves us forward, it is not yet the full story. Novel technologies (think of radar) are also sometimes created by capturing and harnessing novel phenomena (radio waves are reflected by metal objects). But again, if we look closely, we see that phenomena are always captured by existing technologies: radar used high-frequency radio transmitters, circuits, and receivers to harness its effect. So we are back at the same mechanism: novel technologies are made possible by (are created from) combinations of the old.
>
> In a nutshell, then, evolution in technology works this way: novel technologies form from combinations of existing ones, and in turn they become potential components for the construction of further technologies. Some of these in turn become building blocks for the construction of yet further technologies. Feeding this is the harnessing of novel phenomena, which is made possible by combinations of existing technologies.
>
> This mechanism, which I call combinatorial evolution, has an interesting consequence. Because new technologies arise from existing ones, we can say the collective of technology creates itself out of itself. In systems language, technology is autopoietic (from the Greek for "self-creating"). Of course, technology doesn't create itself from itself all on its own. It creates itself with the agency of human beings, much as a coral reef creates itself from itself with the assistance of small organisms.

Arthur also discusses an interesting experiment carried out at the Santa Fe Institute in New Mexico to test these ideas, which seemed to prove them right: starting with a primitive technology (a simple NAND circuit), a computer managed to combine copies of it until it put together more complex circuits (an 8-way-exclusive-OR, 8-way-AND, 4-bit-Equals, and even an 8-bit adder, which is the basis of a simple calculator).
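To get a feel for why NAND is a sensible starting point for that Santa Fe experiment, here is a small Python sketch showing that an 8-bit adder really can be assembled from nothing but copies of a NAND gate. This is a hand-wired construction of my own, not the evolved circuits from the experiment, and all the function names are just illustrative. Only `nand` touches the bits directly; everything else is built by combining earlier pieces, with a plain Python loop standing in for the wiring between the eight full adders.

```python
def nand(a, b):
    """The single primitive: NAND of two bits (0 or 1)."""
    return 1 - (a & b)


# First generation: gates built directly from NAND.
def not_(a):
    return nand(a, a)


def and_(a, b):
    return not_(nand(a, b))


def or_(a, b):
    return nand(not_(a), not_(b))


def xor(a, b):
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


# Second generation: a full adder built from the gates above.
def full_adder(a, b, carry_in):
    partial = xor(a, b)
    total = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return total, carry_out


# Third generation: eight full adders chained into a ripple-carry adder.
def add_8bit(x, y):
    """Return (sum modulo 256, final carry bit)."""
    result, carry = 0, 0
    for i in range(8):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry
```

For example, `add_8bit(100, 55)` gives `(155, 0)`, while `add_8bit(200, 100)` overflows and gives `(44, 1)`. Each layer only becomes practical once the previous one exists, which is the point Arthur makes about technologies becoming building blocks for further technologies.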

[Tue Sep 8 11:14:19 CEST 2009]

I recently got an EeePC 901 as a gift that came installed with a regular version of Windows XP. I assume these systems come pre-installed with a version of Windows specially designed for netbooks, but I am not sure. As it happens, this particular machine originally came pre-installed with a copy of Xandros, and someone decided to remove it and install a pirated copy of Windows. As soon as I got it in my hands, I knew I wanted to install something else, of course. In the end, I downloaded a copy of Ubuntu Netbook Remix with the Ubuntu 9.04 Jaunty Jackalope packages. It was a breeze to install and, more to the point, absolutely everything appeared to run out of the box, including the wireless adapter. I've had no problem whatsoever connecting to my home network using 128-bit WEP, and the webcam also runs fine with Cheese, which is the application installed by default. The same applies to everything else. I just added a few Firefox extensions I use a lot, and also installed a few programming applications via Synaptic. The battery normally lasts around four hours or so, which is not bad. Of course, the small keyboard is sort of annoying sometimes and the screen resolution is nothing to write home about, but it's all pretty decent for the price. All in all, it's been a great experience. Here is a screenshot of the main window.

As you can see, the overall approach to the GUI is slightly different, which I appreciate. The version of Windows XP that was installed on the system before had the very same GUI as a regular laptop or desktop PC, and that truly doesn't work on such tiny devices. The main interface occupies the whole desktop and, as soon as you launch an application, it opens up and also occupies the whole desktop. In order to switch between tasks you have to either use the traditional Alt+Tab key combo or simply click on the icons that you can see in the upper left-hand corner, where it shows that I have opened a terminal as well as two different Firefox windows. On the right, next to the battery widget, you can see the Dropbox icon. Installing their software was pretty easy too. I just used the regular version for Ubuntu 9.04.

[Fri Sep 4 15:18:10 CEST 2009]

One of the things I truly like about Linux and open source in general is that there are always plenty of choices out there for whatever you want to do. One is not limited to a single desktop environment, a single application to do this or that, not even a single sound system. Sure, it gets confusing at times, but one never has that strange feeling of being locked into a particular product in order to satisfy the interests of a big corporation, as happens with Windows or Apple users.

Yet, there are things that I still find hard to understand. It's fine by me if anyone decides to set up his own Linux distribution on a whim. It just adds to the ecosystem. However, I dislike it when certain people drink the Kool-Aid and start ranting about a tiny-itty-bitty distro that supposedly is the best invention since sliced bread. I've seen that happen to Slackware users sometimes. Some of them seem to have this holier-than-thou attitude according to which they represent the purest form of Linux user, the ones who truly know what they are doing, the ones who cannot be bothered with ease of use and other minor things (disclaimer: I stress that only some of them behave in this manner, and my statements shouldn't be applied to every single Slackware user out there). So, I read with some interest an interview with Eric Hameleers, coordinator of Slackware's 64-bit port. As I said, if a limited number of people like to continue developing a particular distribution because they feel attached to it, like it a lot or it just does what they need, that's fine. More power to them. I just hope they realize that a product aimed at a select minority of users truly can never spread much and will definitely never convert users from other operating systems. In other words, they have to come to terms with the idea that their favorite distribution truly is a dead end for the Linux community at large. Why do I say all this apropos the interview with Eric Hameleers? Well, for starters Slackware is one of the last Linux distributions to actually support 64-bit. But then there are also some of the things that he openly says:

> Another issue I faced was the installer. Historically, Slackware's installation environment has been hand-crafted by Pat [Patrick Volkerding, founder and maintainer of Slackware] with contributions from others. This meant there was nothing available that would allow me to create an installer from scratch. With the help of Stuart Winter (who wrote an installer for his ARMedslack port earlier on) and Pat himself, the three of us managed to work out a method for creating identical installers for the x86, x86_64 and ARM platforms. This was crucial because it allowed us to keep the installer stable while we added a whole lot of both small and big improvements.
>
> (...)
>
> There is one other thing worth mentioning about Slackware64. Out of the box, this is a pure 64-bit operating system: it is not capable of running or compiling 32bit binaries. This sets it apart from slamd64, for instance, which has full multilib support. This was a conscious decision, partially based on the viewpoint that there is a 32bit Slackware for those who need it, but at the same time we strived to make Slackware64 "multilib-ready". Only a few easy steps are required for adding full multilib capability to Slackware64. The procedure is documented in an article I wrote for my Wiki. It is possible that a future release of Slackware64 will be multilib. That is Pat's call however.

So, the installer is a one-man show that doesn't scale and the 64-bit distro doesn't run anything but pure 64-bit apps in a pure 64-bit environment, when everybody has heard of the problems running a truly pure 64-bit environment for real everyday use. Sure, the decisions are as respectable as anything else, but certainly not something that will make it easy for the distro to reach the mass market.

So, why care about Slackware? Hameleers explains it:

> To me, Slackware's philosophy has a different angle that sets it apart from all others. To this day, Slackware has an extremely lean design, intended to make you experience Linux the way the software authors intended. This is accomplished by applying patches as little as possible, preferably for stability or compatibility reasons only. Slackware's package manager (yes, it has one, pkgtools!) stays out of your way by not forcing dependency resolution. And the clean, well-documented system scripts (written in bash instead of ruby) allow for a large degree of control over how your system functions. Slackware does not try to assume or anticipate. The installer is still console-based, but it uses dialogs, menus and buttons nevertheless. Not depending on X during installation, Slackware's installer is rock-solid, a statement which I can not repeat for the other distros I use. When you login for the first time after a fresh install you will end in the console instead of X. No assumptions are being made about what your intended use for Slackware is. This comes as a shock to many unsuspecting users, but it is the start of a learning experience.
>
> I am well aware that the above statements are often perceived as negative, but in fact they make Slackware into a versatile tool that is adaptable to many needs. And yet, like any modern-day Linux distro, it fully recognizes and utilizes your hardware by virtue of the same kernel, Hal, D-Bus, X.Org and a truckload of other applications that the big distros ship as well. Slackware does not live in the stone age of computing. It is strong and thrives. It is lean and speedy.
>
> The testimonials of "converted" Slackware users at LinuxQuestions.org and other forums show that Slackware's philosophy of giving full trust to the system admin is an eye-opener to people who struggled with the other distros before. This continuous influx of "converts" is one of the reasons that Slackware has not disappeared into oblivion. Slackware assumes you are smart! This appeals to people.

I've always had two problems with this attitude: one, it is just as possible to perform a minimal install of any other major distro and move on from there, customizing the whole system the way you want to; and two, if being in control truly is your thing, why not try something like Linux From Scratch or Gentoo, which give you even more control than Slackware? As I said, I don't care what your drug of choice is but, please, don't try to convince me that harder is always better just for the sake of it. Is it something you want to do as a learning project? That's fine with me then.

[Fri Sep 4 15:10:19 CEST 2009]

A couple of curiosities. A friend sent me a link to some assembly code that corresponds to equations used for some lunar landing mission and line 666 of the source file reads, literally:

> GAINBRAK,1 # NUMERO MYSTERIOSO

A mysterious number indeed! Hehe.

The same friend also sent me a link to a website from a Polish IT employee who managed to mimic the 4Dwm desktop environment used by SGI's IRIX operating system using web standards. The end result is pretty neat. Check it out. Just hit any key and let the website "reboot". Then click around on the different menu entries and icons. The behavior truly is similar to the one you see on 4Dwm.

[Thu Sep 3 16:31:05 CEST 2009]

Gmail experienced an outage a couple of days ago that, as could be expected, immediately made it to the online news outlets all over the world. Actually, I learned about it from my friends' status updates on Facebook. So, what happened? The Official Gmail Blog tells us the story behind the outage:

> Here's what happened: This morning (Pacific Time) we took a small fraction of Gmail's servers offline to perform routine upgrades. This isn't in itself a problem: we do this all the time, and Gmail's web interface runs in many locations and just sends traffic to other locations when one is offline.
>
> However, as we now know, we had slightly underestimated the load which some recent changes (ironically, some designed to improve service availability) placed on the request routers, servers which direct web queries to the appropriate Gmail server for response. At about 12:30 pm Pacific a few of the request routers became overloaded and in effect told the rest of the system "stop sending us traffic, we're too slow!". This transferred the load onto the remaining request routers, causing a few more of them to also become overloaded, and within minutes nearly all of the request routers were overloaded. As a result, people couldn't access Gmail via the web interface because their requests couldn't be routed to a Gmail server. IMAP/POP access and mail processing continued to work normally because these requests don't use the same routers.

Of course, that explains why I didn't notice anything at all. I use mutt to access the Gmail servers via IMAP and very rarely touch the web interface.

However, this whole incident made me reconsider that old assumption that moving to web apps should put an end to all the traditional upgrade troubles. Sure, it certainly minimizes them. One is far more prone to run into problems when upgrading a regular desktop application (not to mention a whole operating system!) than a web app. Still, the Gmail outage (and this is not the first one they have experienced) goes to show that the same problem can also affect web apps. A world where most people run applications in the cloud (are we there yet?) may certainly increase the chances of massive outages affecting millions of users... and we all know how much certain terrorist networks like that idea. It's a scary thought.
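The domino effect described in the Gmail post-mortem is easy to reproduce with a toy model. The following Python sketch is only my own simplification, not anything Google has published: the total load is split evenly among the routers that are still up, any router whose share exceeds its capacity drops out, and the load is then redistributed among the survivors. The function name `simulate_cascade` and all the numbers are made up for illustration.

```python
def simulate_cascade(capacities, total_load):
    """Return how many routers are still up after each round.

    capacities: maximum load each router can take (hypothetical units).
    total_load: traffic that must be shared evenly by the live routers.
    """
    alive = list(capacities)
    history = [len(alive)]
    while alive:
        share = total_load / len(alive)
        survivors = [c for c in alive if c >= share]
        if len(survivors) == len(alive):
            break  # everyone copes with the current share: stable state
        alive = survivors
        history.append(len(alive))
    return history


# Ten routers with slightly different capacities.
routers = [100, 105, 110, 115, 120, 125, 130, 135, 140, 145]
print(simulate_cascade(routers, 1000))  # [10]
print(simulate_cascade(routers, 1050))  # [10, 9, 6, 0]
```

With a load of 1000 every router copes and nothing happens. Raise the load by just 5% and the weakest router gives up, its traffic pushes three more over the edge, and one round later nothing is left standing. A slight underestimate of the load is all it takes, which matches the account above.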

[Thu Sep 3 14:12:43 CEST 2009]

Apache, the HTTP server, is one of those pieces of software that has been around for so long and runs so well that we pay little attention to it anymore. Every now and then, the Apache Software Foundation releases a new version, the Linux distributions out there ship it and... end of story. Sure, there is the occasional issue with PHP compatibility or one may have to wait for certain modules to be written, but in general it has been a very smooth ride for years now. So, it's always interesting to see the technology news media pay attention to Apache every once in a while. For example, Linux Magazine recently published a piece about the ten things you didn't know that Apache 2.2 could do that makes for an interesting read. Among the most important new features: Server Name Indication (SNI), which makes it possible to run multiple SSL virtual hosts on a single IP address; the addition of a mod_substitute module to modify the response that is being sent to the web client using regular expressions; and the concept of a graceful stop (i.e., the ability to stop the daemon process without interrupting ongoing transactions). Nothing earth-shattering, to be sure, but this is a very mature product.

[Tue Sep 1 12:51:27 CEST 2009]

Now, this is interesting. Information Week informs us that IBM has patented a Facebook remote control that should allow TV viewers to automatically blog about whichever content they happen to be viewing on the TV set by using a specifically designed remote control.

> The patent goes on to describe how the system "allows a viewer to autoblog about currently experienced media programming in real-time without having to resort to direct interaction with a computer to perform the autoblogging."
>
> IBM apparently envisions a system that would facilitate two-way blogging while users are watching TV. "One of the joys of watching television is discussing with one's friends the juicy bits of a favorite show or the latest television program," the patent documents note.
>
> "The enhanced remote controller allows the viewer to both communicate with a blogging server, and thus to a blogging service, as well as display responses to and from other bloggers with whom the viewer is communicating," the application states.

I have definitely come across many instances where people discuss a TV show on Facebook or Twitter. However, I am not so sure the idea will succeed in the end. It definitely won't be the first time that companies have tried to introduce interactive behaviors to the TV viewing experience, and it has never worked so far. It almost seems as if we expect to go through a passive experience when we decide to sit in front of the tube. After all, I wonder how many people just slouch on their favorite couch at the end of the day and turn on the TV while taking a rest, without truly caring much about what is on. Besides, plenty of people already watch TV while typing on their laptops. In other words, the computer experience has moved from desktops to laptops to a great extent. Thus, the new IBM remote may not improve things much, to be honest. At least not enough to be worth the purchase of a new product.