[Thu Dec 29 14:35:18 CST 2005]

Earlier today I was reading through the Edge website, and came across an old interview with Scott McNealy, Sun's CEO, that included the following comment:

"Silicon Graphics is going to make a nice division, at the very high end, of one of the Big Three computer businesses at some point. You might think of SGI as the sport-utility vehicle of the computer industry. The product line could be very profitable, but needs to be part of a larger organization. There aren't going to be niche companies in the computer business in the same way that there aren't any car companies that specialize in two-door sedans or four-door convertibles. You've got to be a broadly global player with volume. Scale really matters in our business."

Right on, Scott! I have been telling this to friends and co-workers for ages now. Bob Bishop et alii are still betting on the idea that there is indeed such a thing as a niche market in the computer business, and firmly believe that they can turn around the company by catering solely to the high-performance market. I am convinced their strategy is wrong. They constantly mention Rolls Royce, Porsche, Ferrari and others as good examples of companies that managed to carve a niche and survive in a highly competitive world. They always miss an important fact: none of those companies remains an independent company. In other words, they are all part of a larger company that, at one point or another, bought them. Yes, it is possible to make money out of a niche market in the computer business, but only as long as you are part of a larger organization that will spread the costs, as McNealy points out. Apparently, Bishop and his team have not realized this yet.

[Tue Dec 27 10:43:01 CST 2005]

Although, as I have written in this blog a few times already, Linux still has some way to go before it becomes your regular user's home desktop (especially when it comes to multimedia issues), it is nevertheless obvious that it has made huge strides compared to what it was just a few years ago. This year, for Christmas, I got a 512MB USB thumb drive (yeah, I know, a little bit behind the curve). So, I just plugged it into my Ubuntu 5.10 (Breezy Badger) system and... voilà! I did not have to do anything at all to get it to work! Here is the info that showed up in the system logs:

    Dec 27 10:25:03 frankfurt kernel: [8414693.959000] usb 1-1: new full speed USB device using uhci_hcd and address 3
    Dec 27 10:25:03 frankfurt kernel: [8414694.075000] scsi3 : SCSI emulation for USB Mass Storage devices
    Dec 27 10:25:04 frankfurt usb.agent[29768]: usb-storage: already loaded
    Dec 27 10:25:08 frankfurt kernel: [8414699.082000] Vendor: Memorex Model: TD Classic 003C Rev: 1.04
    Dec 27 10:25:08 frankfurt kernel: [8414699.082000] Type: Direct-Access ANSI SCSI revision: 00
    Dec 27 10:25:09 frankfurt kernel: [8414699.756000] SCSI device sdb: 1003520 512-byte hdwr sectors (514 MB)
    Dec 27 10:25:09 frankfurt kernel: [8414699.759000] sdb: Write Protect is off
    Dec 27 10:25:09 frankfurt kernel: [8414699.774000] SCSI device sdb: 1003520 512-byte hdwr sectors (514 MB)
    Dec 27 10:25:09 frankfurt kernel: [8414699.777000] sdb: Write Protect is off
    Dec 27 10:25:09 frankfurt kernel: [8414699.777000] /dev/scsi/host3/bus0/target0/lun0: p1
    Dec 27 10:25:09 frankfurt kernel: [8414699.787000] Attached scsi removable disk sdb at scsi3, channel 0, id 0, lun 0
    Dec 27 10:25:09 frankfurt scsi.agent[29815]: sd_mod: loaded sucessfully (for disk)

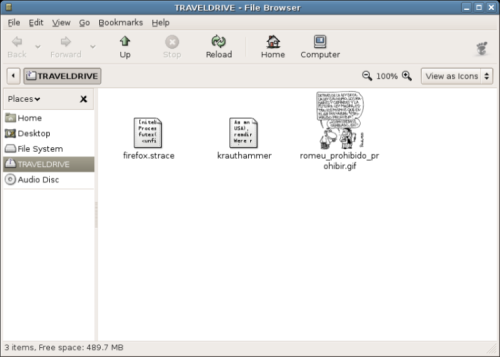

... and here is a screenshot of Nautilus, which popped up right away:

By the way, it came pre-formatted as a VFAT filesystem by default:

I just had to test it under MacOS X where it also worked flawlessly, so now I can finally bring all my files with me and use them both in Linux and MacOS X. I am a happy man. {link to this story}[nitebirdz@frankfurt:]$ mount | grep TRAVEL /dev/sdb1 on /media/TRAVELDRIVE type vfat (rw,nosuid,nodev,quiet,shortname=\ winnt,uid=1000,gid=1000,umask=077,iocharset=utf8)
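As an aside, the size reported in the kernel log for this post (1003520 sectors of 512 bytes, shown as 514 MB) can be checked with a little arithmetic. The sketch below is only an illustration: the function names are made up for this example, and it assumes the kernel's figure is in decimal megabytes, which is consistent with the numbers but not something the log itself states.

```python
# Figures taken from the kernel log line:
#   "SCSI device sdb: 1003520 512-byte hdwr sectors (514 MB)"
SECTOR_COUNT = 1_003_520
SECTOR_SIZE_BYTES = 512


def capacity_bytes(sectors: int, sector_size: int) -> int:
    """Total capacity is simply sector count times sector size."""
    return sectors * sector_size


def to_decimal_mb(num_bytes: int) -> float:
    """Megabytes as powers of ten (1 MB = 10**6 bytes)."""
    return num_bytes / 10**6


def to_mib(num_bytes: int) -> float:
    """Mebibytes as powers of two (1 MiB = 2**20 bytes)."""
    return num_bytes / 2**20


if __name__ == "__main__":
    total = capacity_bytes(SECTOR_COUNT, SECTOR_SIZE_BYTES)
    print(f"{total} bytes")
    print(f"{to_decimal_mb(total):.1f} MB (decimal)")
    print(f"{to_mib(total):.1f} MiB (binary)")
```

Under that assumption, the drive sold as "512MB" holds roughly 514 million bytes, which is 490 MiB when counted in powers of two.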

[Tue Dec 20 10:49:05 CST 2005]

A good friend sent me a link to a Google Help Cheat Sheet that should prove quite useful. I did not know, for instance, that one could use the

[Sat Dec 17 20:04:34 CST 2005]

Perusing Kernel Traffic, I noticed an email sent by Ben Collins to the Linux kernel mailing list announcing that the Ubuntu kernel is now maintained in

- A kernel geared toward a real world Linux distribution, supporting drivers and subsystems that end users need. You will find a lot of external drivers in our tree, that for whatever reason, are not included in the upstream kernel. We hope that including these drivers will give users a one-stop kernel (no downloading and compiling external modules), and also provide much needed testing for modules hoping to be included into the mainstream kernel.

- Real world configurations. We will provide default kernel configs for a variety of architectures and system "flavors".

- Any feature and/or driver included will attempt to be configurable. That is, if you don't select to compile it, it will not cause any significant changes from the stock kernel we are using at that point.

- Open development model. We want to be as close to the kernel community as possible. Integrating ideas, getting feedback, and causing as little havoc as possible :)

The idea of including as many external drivers as possible in the tree sounds appealing, especially after hearing from friends every now and then how they needed to recompile the kernel in order to get such and such device and/or feature to work. The Ubuntu guys are definitely pushing the envelope, and making it easier to build a Linux distribution that can truly be used on the desktop. It was about time.

[Sat Dec 17 19:46:41 CST 2005]

With all the positive comments one hears about outsourcing software development to India (well, sure, I am referring to the comments made by certain top managers here), it came as a surprise to read Jonathan Corbet's report of the FOSS.IN event published in last week's LWN. Here are a few nuggets to keep in mind when considering a move to Bangalore:

"Neeti's talk [Neetibodh Agarwal, a developer with Novell] described Indian developers as needing to have their jobs laid out to them in great detail. They want to know where their boundaries are, and are uncomfortable if left to determine their own priorities and approaches. Your editor's [i.e., Jonathan Corbet himself] initial reaction was that this claim sounded like classic talk from a pointy-haired boss who does not trust his employees to make decisions. Subsequent discussions backed up Neeti's claims, however. A few Indians told me that Indian employees require a high degree of supervision; perhaps that is why the pizza stand at the site required two levels of necktie-wearing bosses who apparently did little to actually get pizza into the hands of conference attendees. It is not that Indians lack the intelligence to function without a boss breathing down their neck (that is clearly not the case) but all of their training tells them to work in that way."

Hmmm. Interesting, I think. A stereotype, of course, but there may be some kernel of truth in it, as tends to be the case with all stereotypes. In any case, Corbet goes on to discuss why this could perhaps be the ultimate cause behind the lack of involvement of Indian developers in free software projects in general.

"So if one were to construct a stereotypical picture of an Indian software developer, it would depict a person who sees programming very much as a job, and not as an activity which can be interesting or rewarding in its own right. This developer is most interested in getting, and keeping, a stable job in a country where an engineering career can be a ticket to a relatively comfortable middle-class existence. Keeping that job requires keeping management, and coworkers, happy, and not rocking the boat.

"For such a developer, the free software community is not a particularly attractive or welcoming place. A developer who contributes to a free software project may earn a strong reputation in the community, but that reputation is not appreciated by that developer's employer or co-workers, and is not helpful for his or her career. Criticism from the community (even routine criticism of a patch by people who appreciate the developer's contributions in general) can be hurtful to a career in a culture where open criticism is not the normal way of doing things. Developers who expect to have their job parameters laid out to them in detail may feel lost in a project where they are expected to find something useful to do, and push it forward themselves. And these developers, while being possibly quite skilled in what they do, often have no real passion for programming, and leave it all behind when they leave the office each day."

[Sat Dec 17 16:32:30 CST 2005]

InfoWorld published an article a couple of days ago about the differences between Red Hat and Novell, written by a Tom Burke who, apparently, used to work for Novell and dealt directly with Red Hat employees quite often. Pardon my skepticism, but I find it unbelievable that anyone out there can seriously claim that both companies are somehow converging towards a middle ground, and Burke's main point appears to be precisely that. Actually, to me his most amazing argument is the following:

"Red Hat has a hard-charging, take-no-prisoners approach to the market. If you're not making them money, you're not going to get their ear. At times, because of how tightly Szulik runs the ship, they simply didn't have enough employees to be able to service all the demand, causing people outside the company to view Red Hat as aloof and arrogant.

"This has led the growing open source ecosystem to Novell, which is partner-centric and easy-going almost to a fault. Ron Hovsepian is changing this, and Novell is starting to become much more choosy about opportunities (customer and partnering) that come its way. The company's culture is changing for the better along with this shift in opportunity mindset. Novell is becoming less concerned with popularity and more concerned with dollars."

Yes, I know, this particular paragraph I just quoted clearly contradicts what I explained above as the main point of the author's article. What can I do? The piece truly is as messy as it sounds, in spite of the attention it has attracted. In any case, I just cannot believe anybody out there can seriously argue that the "open source ecosystem" has been led to Novell. Excuse me? Do we live in the same world? If there is a Linux distro out there that has never made any welcoming gestures towards the open source community, it is precisely SUSE, although back in the day Caldera was, without any doubt, the winner in this category. And if anybody doubts what I am saying, he just needs to go in search of a SUSE community to speak of. It is, for the most part, non-existent, especially when compared to Red Hat and Fedora. I am not sure what Burke was smoking, but his article certainly displays a whole lot of wishful thinking.

[Fri Dec 16 12:12:09 CST 2005]

While perusing the latest issue of Dr. Dobb's Journal, I found an article by Guy L. Steele Jr. on language design that brings up some interesting issues about the old paradigm of structured programming:

"Sequencing implies a single thread of control, ordering actions in time. In the future, we may need languages that better support multiple threads of control and deal with the consequences of unordered actions.

"If-then-else makes a binary choice, which is fine when there are really exactly two cases to consider; but often there are more than two possibilities, in which case if-then-else imposes a kind of 'Boolean bottleneck' that requires complex case analysis to be reduced to a tree of binary decisions. This decision tree then imposes an arbitrary time ordering of decisions that may not be relevant to the description of the computation.

"Loops connote sequential execution of successive iterations, and furthermore rely on side effects in the loop body to make progress, making it difficult for a compiler to recognize potential parallelism.

(...)

"What might a language look like in which parallelism is the default? How about data-parallel languages, in which you operate, at least conceptually, on all the elements of an array at the same time? These go back to APL in the 1960s, and there was a revival of interest in the 1980s when data-parallel computer architectures were in vogue. But they were not entirely satisfactory. I'm talking about a more general sort of language in which there are control structures, but designed for parallelism, rather than the sequential mindset of conventional structured programming. What if do loops and for loops were normally parallel, and you had to use a special declaration or keyword to indicate sequential execution? That might change your mindset a little bit."

Back in the days when I started playing with computers (sometime in the early 1980s), structured programming in BASIC is what there was, at least if you were a kid just starting to learn these things on a Sinclair ZX Spectrum. So, to a great extent, my mindset is there. To this day, when I think about a way to solve a problem in code I tend to take the structured approach. However, I can see the author's point. We live in a world where most computers are networked together, and increasingly we are seeing SMP systems all over the place. It would make sense for our programming techniques to adapt to that reality, making it easier to live in a world where parallelism is our everyday meal. As a matter of fact, reality has indeed always been this way. We just did not have the technology to allow us to approach the problems in this way, but real life has never been structured. A structured reality has never been anything but a figment of our imagination. We just agreed to act as if it were, and went on with our lives.
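To make Steele's remark about loops a bit more concrete, here is a rough sketch of the contrast in Python. It is only an illustration of the idea, not anything taken from his article: the function names are invented for this example, and `ThreadPoolExecutor` from the standard library stands in for a language where the parallel form would be the default.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x: int) -> int:
    """A pure function: its result depends only on its argument."""
    return x * x


def sequential_squares(values):
    """The structured-programming version.

    Each iteration makes progress through a side effect (appending to a
    shared list), so the iterations are tied to one particular order.
    """
    result = []
    for v in values:
        result.append(square(v))
    return result


def parallel_squares(values, workers: int = 4):
    """The data-parallel version.

    Conceptually the function is applied to every element at once; no
    element depends on another. Executor.map still hands the results
    back in input order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(square, values))


if __name__ == "__main__":
    data = range(10)
    print(sequential_squares(data))
    print(parallel_squares(data))
```

Both functions return the same list. In standard CPython the threads will not actually speed up CPU-bound work like this, so the point here is the shape of the code rather than performance: the second version says nothing about the order in which the elements are processed.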

[Tue Dec 13 20:35:56 CST 2005]

About a month ago, I came across a piece about Ubuntu on a business desktop that got me wondering whether the long-awaited moment when your run-of-the-mill user can finally use Linux at work is here. The author gives a very positive review of Ubuntu Linux but, being fair and taking a look at the whole picture, one notices that he ran into several issues: he had trouble mounting a Samba filesystem using the GNOME utility to mount shares locally (by the way, it turned out to be a problem with Samba and not GNOME, for all the GNOME-haters out there); Evolution's compatibility with MS Exchange was not as smooth as he had hoped for; PPTP had a bug that prevented it from working with Windows 2003 Server using an unencrypted connection; and OpenOffice's new database application proved to be quite limited. In other words, in spite of the author's overall positive ratings, a serious and objective read of his article leads one to believe that Ubuntu in particular (and Linux in general) still has a way to go before being able to run smoothly in a small shop, at least one where there are still Windows machines and there is no in-house guru to give a hand.

To all this, I would add my own experience when I tried to convince a co-worker to install Ubuntu at work, and he was quite disappointed to find out that the installer never prompted him to configure the system as a NIS client. Even worse, other than directly editing the files there was no easy way to accomplish this using any GUI application. Even an email sent to the usually extremely useful ubuntu-users mailing list did not help; at any rate, nobody replied to it.

Mind you, I love Ubuntu. I have been running it for over a year now, it is without any doubt my favorite distro, and I always recommend it to my friends. Even more, it is obvious to me that, right now, this is the only distribution I would recommend to a newbie I am trying to convert to Linux. I do run Linux on a daily basis, both at home and at work, and I simply could not live without it. Still, it seems clear to me that it has some room for improvement before it is widely adopted everywhere. In the meantime, Ubuntu is for sure a winner, especially in environments where it is not necessary to interact with other Windows systems or where the file servers are running on UNIX or Linux.

By the way, if, like me, you are also an Ubuntu fan, you may want to check out this article about the ten most popular Ubuntu sites on the net. The Ubuntu Blog is one of my favorites, although the name is a little bit misleading for it does not contain rants about this or that feature but rather hints and tips to better use your Ubuntu system. For one reason or another, the list does not include Planet Ubuntu, which I like to read periodically too.

[Thu Dec 8 16:02:07 CST 2005]

A good friend sent me a link this morning to a pretty funny document listing the top ten weirdest USB drives ever. It is all there: the iduck, the Big Tiki Drive, the sushi drive and, of course, the Barbie USB drive! Pretty hilarious, in a geeky type of way.

[Tue Dec 6 08:30:15 CST 2005]

A couple of things I came across this morning. First of all, The Register published a review of The Linux Kernel Primer, a book that introduces the main elements of the kernel to its readers: processes, memory management, I/O, filesystems, scheduler... The reviewers did not appear to like the book much, but it may still be an interesting read. Then, I found somewhere else a link to FreeMind, an open source mind-mapping application written in Java that may come in handy to jot down notes and ideas during your next brainstorm.

[Fri Dec 2 15:41:52 CST 2005]

Call me sentimental, but I did not like it when I read LWN's recent piece on the end of USENET. I know, few people use it these days. I know, web forums are all the rage, and we also have mailing lists, not to mention RSS to always keep up with the latest and greatest. Still, as the author himself acknowledges, it would be hard to keep up with so many lists if it were not for the Gmane repository which, after all, is NNTP-based. To most people, the Internet is just a synonym for the Web, to be honest. The vast majority of non-techies out there (and even a considerable number of techies) just use their browsers and email clients. That's about it. They know little or nothing about FTP or NNTP, but that does not mean they never use them; they may simply not be aware of it. After all, how many times do we find links in web documents pointing to FTP servers so we can download a large file? How many users know that they are using a different protocol at that very moment? Come on, truly. How many users even realize they are not using the Web at that precise instant? To most users, it is just the Web, and the same applies to all those who use Google Groups. They are not even aware they are using a service that relies on NNTP. Still, that does not change the fact that the protocol, the infrastructure, is there, and it is still useful. To argue, as the author of the story does, that "the signal-to-noise ratio of USENET sunk to a point that most people had no remaining desire to deal with" is quite misleading. Should we also do away with email because of all the spam and phishing going on? Personally, I see far more of this noise when checking my email account than when perusing the newsgroups out there, as long as I stay away from the porn groups, of course, but then that is definitely not NNTP's problem, is it?